All Programming is Web Programming

Michael Braude decries the popularity of web programming:

> The reason most people want to program for the web is that they’re not smart enough to do anything else. They don’t understand compilers, concurrency, 3D or class inheritance. They haven’t got a clue why I’d use an interface or an abstract class. They don’t understand: virtual methods, pointers, references, garbage collection, finalizers, pass-by-reference vs. pass-by-value, virtual C++ destructors, or the differences between C# structs and classes. They also know nothing about process. Waterfall? Spiral? Agile? Forget it. They’ve never seen a requirements document, they’ve never written a design document, they’ve never drawn a UML diagram, and they haven’t even heard of a sequence diagram.
>
> But they do know a few things: they know how to throw an ASP.NET webpage together, send some (poorly done) SQL down into a database, fill a dataset, and render a grid control. This much they’ve figured out. And the chances are good it didn’t take them long to figure it out.
>
> So forgive me for being smarmy and offensive, but I have no interest in being a ‘web guy.’ And there are two reasons for this. First, it’s not a challenging medium for me. And second, because the vast majority of Internet companies are filled with bad engineers - precisely because you don’t need to know complicated things to be a web developer. As far as I’m concerned, the Internet is responsible for a collective dumbing down of our intelligence. You just don’t have to be that smart to throw up a webpage.
>
> I really hope everybody’s wrong and everything doesn’t “move to the web.” Because if it does, one day I will either have to reluctantly join this boring movement, or I’ll have to find another profession.

Let’s put aside, for the moment, the absurd argument that web development is not challenging, and that it attracts sub-par software developers. Even if that were true, it’s irrelevant.

I hate to have to be the one to break the bad news to Michael, but for an increasingly large percentage of users, the desktop application is already dead. Most of the desktop applications typical users need were replaced by web applications years ago. And more are replaced every day, as web browsers evolve to become more robust, more capable, more powerful.

You hope everything doesn’t “move to the web”? Wake the hell up! It’s already happened!

Any student of computing history will tell you that the dominance of web applications is exactly what the principle of least power predicts:

> Computer Science spent the last forty years making languages which were as powerful as possible. Nowadays we have to appreciate the reasons for picking not the most powerful solution but the least powerful. The less powerful the language, the more you can do with the data stored in that language. If you write it in a simple declarative form, anyone can write a program to analyze it. If, for example, a web page with weather data has RDF describing that data, a user can retrieve it as a table, perhaps average it, plot it, deduce things from it in combination with other information. At the other end of the scale is the weather information portrayed by the cunning Java applet. While this might allow a very cool user interface, it cannot be analyzed at all. The search engine finding the page will have no idea of what the data is or what it is about. The only way to find out what a Java applet means is to set it running in front of a person.
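
To make that weather example concrete, here's a minimal sketch in Python. Everything in it is made up for illustration: plain JSON stands in for the RDF the quote mentions, and the city, the field names, and the two function names are hypothetical. The only point is that once the data is published declaratively, a stranger can tabulate it or average it in a handful of lines, with no need to run anyone's applet.

```python
import json
from statistics import mean

# Hypothetical weather data published in a simple declarative form.
# (JSON is an assumption here; the quote talks about RDF.)
WEATHER_JSON = """
[
  {"city": "Berkeley", "day": "Mon", "high_c": 18.0},
  {"city": "Berkeley", "day": "Tue", "high_c": 20.0},
  {"city": "Berkeley", "day": "Wed", "high_c": 22.0}
]
"""


def as_table(raw):
    """Retrieve the readings as (day, high) rows."""
    return [(r["day"], r["high_c"]) for r in json.loads(raw)]


def average_high(raw):
    """Average the daily highs across all readings."""
    return mean(r["high_c"] for r in json.loads(raw))


if __name__ == "__main__":
    for day, high in as_table(WEATHER_JSON):
        print(day, high)
    print("average high:", average_high(WEATHER_JSON))
```

Try doing the same thing against a compiled widget that only paints pixels on a screen, and the gap in "power" starts to look like a feature.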

The web is the very embodiment of doing the ~~stupidest~~ simplest thing that could possibly work. If that scares you – if that’s disturbing to you – then I humbly submit that you have no business being a programmer.

Should all applications be web applications? Of course not. There will continue to be important exceptions and classes of software that have nothing to do with the web. But these are minority and specialty applications. Important niches, to be sure, but niches nonetheless.

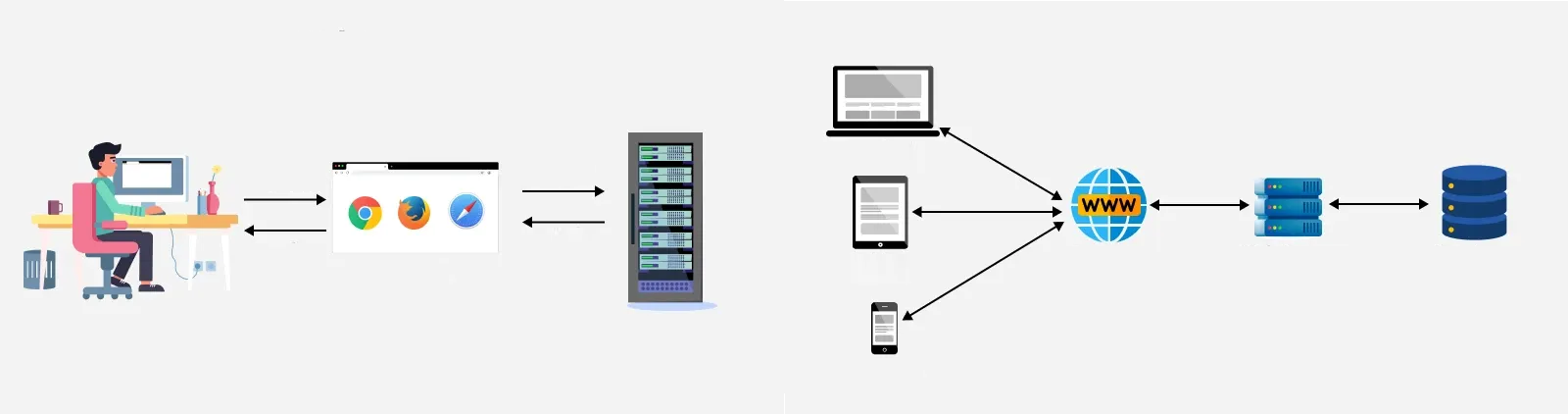

If you want your software to be experienced by as many users as possible, there is absolutely no better route than a web app. The web is the most efficient, most pervasive, most immediate distribution network for software ever created. Any user with an internet connection and a browser, anywhere in the world, is two clicks away from interacting with the software you wrote. The audience and reach of even the crappiest web application is astonishing, and getting larger every day. That’s why I coined Atwood’s Law.

Atwood’s Law: any application that can be written in JavaScript, will eventually be written in JavaScript.

Writing Photoshop, Word, or Excel in JavaScript makes zero engineering sense, but it’s inevitable. It will happen. In fact, it’s already happening. Just look around you.

As a software developer, I am happiest writing software that gets used. What’s the point of all this craftsmanship if your software ends up locked away in a binary executable, which has to be purchased and licensed and shipped and downloaded and installed and maintained and upgraded? With all those old, traditional barriers between programmers and users, it’s a wonder the software industry managed to exist at all. But in the brave new world of web applications, those limitations fall away. There are no boundaries. Software can be everywhere.

Web programming is far from perfect. It’s downright kludgy. It’s true that any J. Random Coder can plop out a terrible web application, and 99% of web applications are absolute crap. But this also means the truly brilliant programmers are now getting their code in front of hundreds, thousands, maybe even millions of users that they would have had absolutely no hope of reaching pre-web. There’s nothing sadder, for my money, than code that dies unknown and unloved. Recasting software into web applications empowers programmers to get their software in front of someone, somewhere. Even if it sucks.

If the audience and craftsmanship argument isn’t enough to convince you, consider the business angle.

You’re doing a web app, right? This isn’t the 1980s. Your crummy, half-assed web app will still be more successful than your competitor’s most polished software application.

Pretty soon, all programming will be web programming. If you don’t think that’s a cause for celebration for the average working programmer, then maybe you should find another profession.