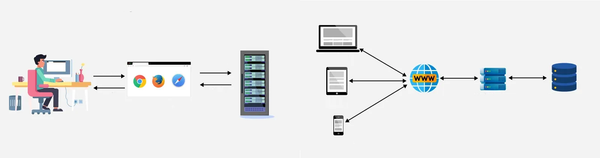

The State of Solid State Hard Drives

I’ve seen a lot of people play The Computer Performance Shell Game poorly. They overinvest in a fancy CPU while pairing it with limited memory, a plain-Jane hard drive, or a generic video card. For most users, that fire-breathing quad-core CPU is sitting around twiddling its virtual thumbs