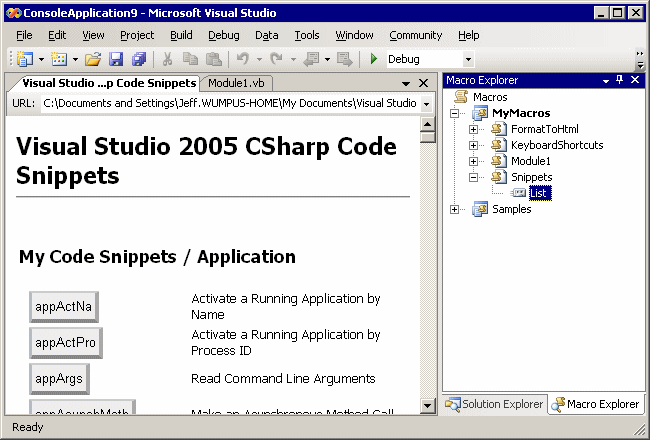

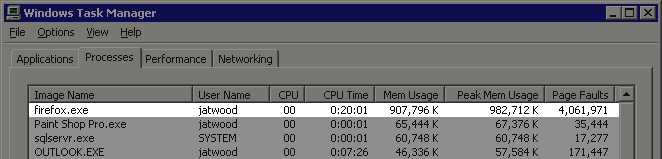

Firefox Excessive Memory Usage

I like Firefox. I’ve even grown to like it slightly more than IE6, mostly because it has a far richer add-on ecosystem. But I have one serious problem with Firefox: its memory usage. This screenshot was taken after a few days of regular Firefox usage. That’s over 900 megabytes of memory