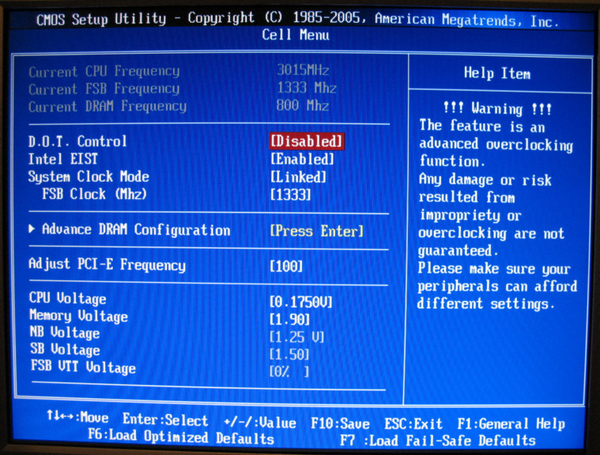

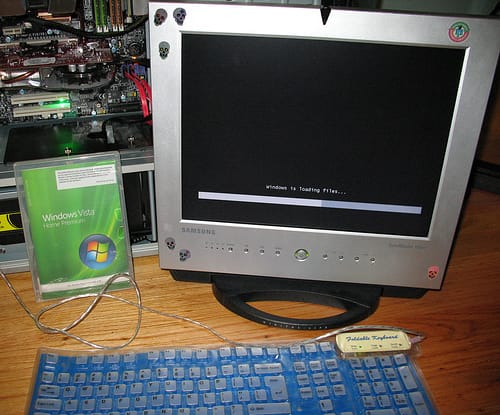

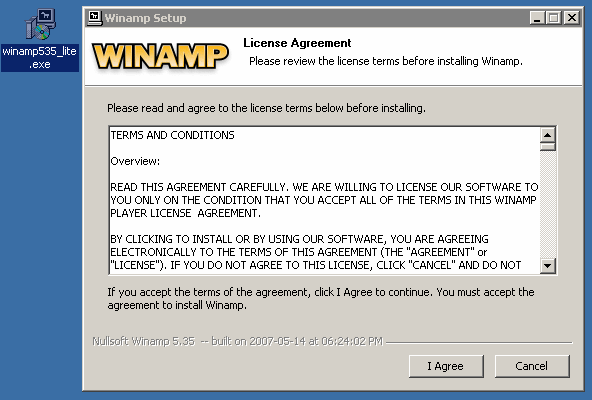

What’s Wrong With Setup.exe?

Ned Batchelder shares a complaint about the Mac application installation process:

> Here’s what I did to install the application Foo [on the Mac]:
>
> 1. Downloaded FooDownload.dmg.zip to the desktop.
> 2. StuffIt Expander launched automatically, and gave me a FooDownload.dmg Folder on the desktop.
> 3. At this