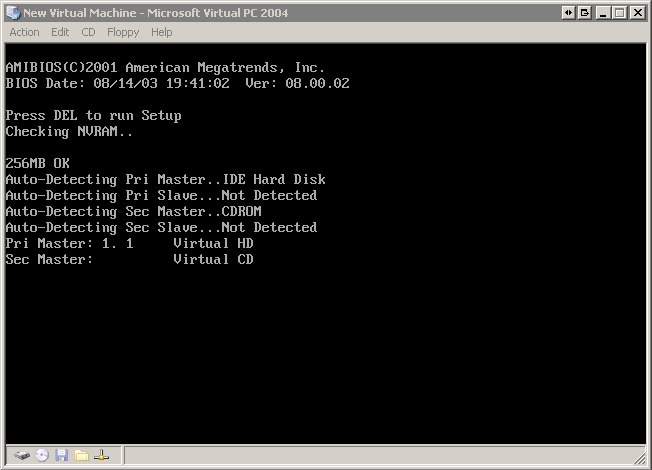

Virtual PC 2004

This won’t be news to a lot of you, but I was playing around with Microsoft Virtual PC 2004 today, and it’s very cool. I know, I know, I’m probably the last developer on the planet to get wise to the benefits of virtual machine technology. In