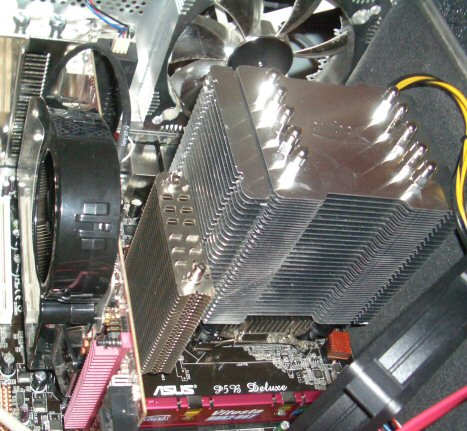


My Giant Heatsink Fetish

One side effect of building quiet PCs is that you tend to develop a giant heatsink fetish. From left to right:

* Arctic Cooling Accelero X1 on the ATI 1900XTX video card
* Thermalright HR-05 on the Intel 965 northbridge chipset
* Scythe Infinity on the Core 2 Duo CPU

It pains me