
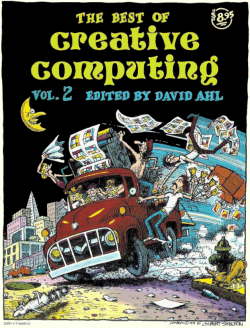

The Best of Creative Computing

While researching a few recent blog entries, I found the amazing Atari Archives. The name is a little misleading, because the collection isn't limited to Atari material. The archives contain incredible page-by-page, high-resolution images of many classic computer books, including The Best of Creative Computing, volume 1 (1976).