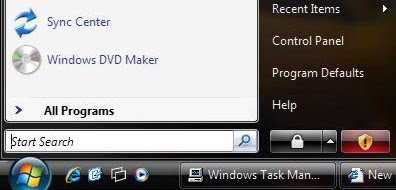

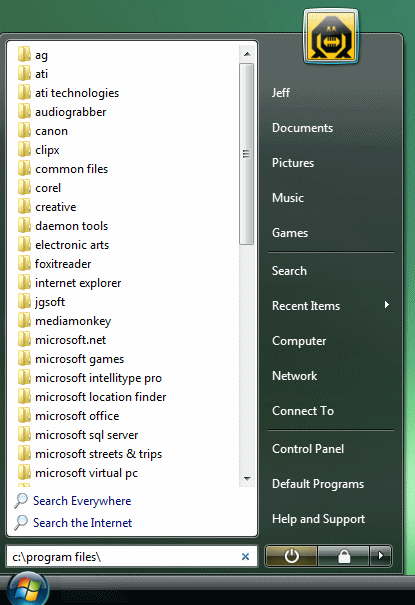

There Are No Design Leaders in the PC World

Robert Cringely’s 1995 documentary Triumph of the Nerds: An Irreverent History of the PC Industry features dozens of fascinating interviews with icons of the software industry. It includes a brief interview segment with Steve Jobs, in which he says the following: The only problem with Microsoft is they just have