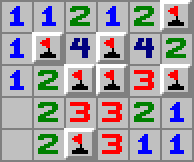

Programming Games, Analyzing Games

For many of us programmers, our introduction to programming was our dad forcing us to write our own games. Instead of the shiny new Atari 2600 game console I wanted, I got a Texas Instruments TI-99/4A computer. That’s not exactly what I had in mind at the time, of