I recently had the opportunity to rebuild my work PC. It strongly resembles the "Little Bang" D.I.Y. system I outlined in my previous post on the philosophy of building your own computer.

See, I do take my own advice.

Here's a quick breakdown of the components and the rationale behind each. Every aspect of this system has been a blog post at one point or another.

- ASUS Vento 3600 case (green)

Is there anything more boring than a beige box? The Vento is a little aggravating to work on, and it's a bit bulky. But it's unique, a total conversation starter, and the sparkly green model fits the Vertigo color scheme to a T. I even built my wife a PC using the red Vento. The 3600 has been discontinued in favor of the 7700; the newest version is, sadly, much uglier.

- MSI P6N SLI, NVIDIA 650i chipset

The 650i is a far more economical variation of the ridiculously expensive NVIDIA 680i chipset that offers the same excellent performance. Dual PCI Express slots, for two video cards, are a must in my three-monitor world. The board also has a fairly large, passive thin-fin northbridge cooler; the quality of the motherboard chipset cooling is important, because modern motherboard chipsets can dissipate upwards of 20-30 watts all by themselves. And it still runs blazingly hot, even at idle.

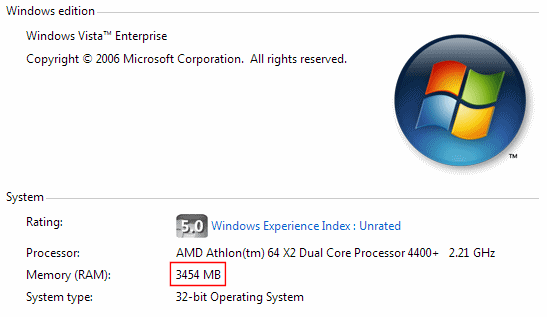

- Intel Core 2 Duo E6600

The Core 2 Duo is Intel's best processor in years. I opted for the E6600 because I have an unnatural love for large L2 caches, but even the cheapest Core 2 Duo runs rings around the competition. And all the Core 2 Duos overclock like mad. This one is running at 3 GHz with a very minor voltage bump for peace of mind.
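For the curious, here is a rough sketch of the arithmetic behind that overclock. It assumes the E6600's stock figures (2.4 GHz from a 9x multiplier) and assumes the multiplier is left alone while the front-side bus is raised; the post doesn't spell out the actual BIOS settings, so treat the derived bus speed as illustrative. The function names are made up for this example.

```python
# Stock E6600 figures; the overclocked bus speed below is derived,
# not quoted from the BIOS settings actually used.
STOCK_CLOCK_MHZ = 2400
MULTIPLIER = 9            # assumed unchanged during the overclock
TARGET_CLOCK_MHZ = 3000


def required_fsb_mhz(core_clock_mhz: float, multiplier: int) -> float:
    """Bus clock needed to reach a core clock at a fixed multiplier."""
    return core_clock_mhz / multiplier


def overclock_percent(stock_mhz: float, target_mhz: float) -> float:
    """Relative increase of the target clock over stock, in percent."""
    return (target_mhz - stock_mhz) / stock_mhz * 100


stock_fsb = required_fsb_mhz(STOCK_CLOCK_MHZ, MULTIPLIER)
target_fsb = required_fsb_mhz(TARGET_CLOCK_MHZ, MULTIPLIER)
gain = overclock_percent(STOCK_CLOCK_MHZ, TARGET_CLOCK_MHZ)

print(f"Stock bus:  {stock_fsb:.0f} MHz")
print(f"Target bus: {target_fsb:.0f} MHz")
print(f"Overclock:  {gain:.0f}%")
```

Under those assumptions, 3 GHz works out to a 25% overclock, which is moderate by Core 2 Duo standards.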

- Antec NeoHE 380 watt power supply

Great modular cable power supply, with around 80% efficiency at typical load levels. It's extremely quiet, per the SPCR review. It's a myth that you "need" a 500 watt power supply, but 380 W is about the lowest-wattage model you can buy these days. The quality of the power supply is far more important than any arbitrary watt number printed on its side.
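To put the efficiency number in perspective, here is a minimal sketch of how efficiency relates the power the components draw to the power pulled from the wall. The 200 W load is a hypothetical figure chosen for illustration, not a measurement of this system, and the function names are invented for the example.

```python
def wall_draw_watts(dc_load_watts: float, efficiency: float) -> float:
    """AC power drawn from the wall for a given DC load and PSU efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return dc_load_watts / efficiency


def headroom_watts(rated_watts: float, dc_load_watts: float) -> float:
    """Unused rated capacity at a given DC load."""
    return rated_watts - dc_load_watts


RATED_WATTS = 380            # Antec NeoHE rating from the post
EFFICIENCY = 0.80            # "around 80%" from the post
ASSUMED_DC_LOAD_WATTS = 200  # hypothetical load, not measured

wall = wall_draw_watts(ASSUMED_DC_LOAD_WATTS, EFFICIENCY)
spare = headroom_watts(RATED_WATTS, ASSUMED_DC_LOAD_WATTS)

print(f"Wall draw: {wall:.0f} W")
print(f"Waste heat in the PSU: {wall - ASSUMED_DC_LOAD_WATTS:.0f} W")
print(f"Headroom on a {RATED_WATTS} W unit: {spare:.0f} W")
```

The rating on the label describes what the supply can deliver to the components, so a 380 W unit still has plenty of room at a load like the assumed one.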

- Scythe Ninja heatsink

The Ninja, despite the goofy name, offers superlative performance. It is easily one of the greatest heatsinks ever made, and still a top-rank performer. It's quite inexpensive these days, too. As you can see, I am running it fanless. The Ninja is particularly suited for passive operation because of the widely-spaced fins. It's easily cooled passively, even under overclocked, dual Prime95 load, by the 120 mm exhaust fan directly behind it. (Disclaimer: I have a giant heatsink fetish.)

- Dual passive GeForce 7600 GT 256 MB video cards

The 7600 GT was the runaway champ in the video card power/performance analysis research I did last summer. The model I chose is a passively cooled, dual slot design from Gigabyte (model NX76T256D). It offers outstanding performance, it runs cool, it has dual DVI, and the design is clever. I liked this card so much, I bought two of them. Not for SLI (although that's now an option) but for more than two monitors. It's inexpensive, too, at around $115 per card.

- 2 GB of generic DDR2-800 (PC2-6400)

I don't believe in buying expensive memory. It's not worth it, unless you're an extreme overclocker. I buy cheap, reasonable quality memory. Even the cheap stuff overclocks fairly well, at least for the moderate overclocks I'm shooting for.

- 74 GB 10,000 RPM primary hard drive; 300 GB 7,200 RPM secondary hard drive

I cannot emphasize enough how big the performance difference is between 10,000 RPM drives and 7,200 RPM drives. I know it's a little expensive, but the merits of the faster drive, plus the flexibility of having two spindles in your system, make it well worth the investment.

And the whole system is quiet, too. It is cooled by three fans: one 120 mm exhaust, the 80 mm fan in the power supply, and an optional 80 mm fan I installed in the front of the case to keep hard drive temperatures down. Airflow in the hard drive area is quite limited on the Vento.

One of the advantages of a D.I.Y. system is that you can perform relatively inexpensive upgrades instead of buying an entirely new computer. The most recent one was plopping in the new motherboard/CPU/memory/heatsink combo. With that upgrade, I now have a top-of-the-line dual-core PC running at 3.0 GHz, and it only cost me $650 to get there.
