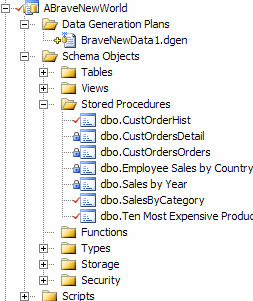

database management

Is Your Database Under Version Control?

When I ask development teams whether their database is under version control, I usually get blank stares. The database is a critical part of your application. If you deploy version 2.0 of your application against version 1.0 of your database, what do you get? A broken application. And