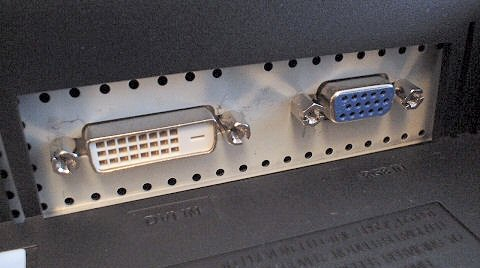

Will your next computer monitor be an HDTV?

Instead of one giant monitor, I’d rather have multiple moderately large monitors. I’m a card-carrying member of the prestigious three-monitor club. Still, giant monitors have their charms, too; there is something to be said for an enormous, contiguous display area. But large monitors tend to be inordinately,