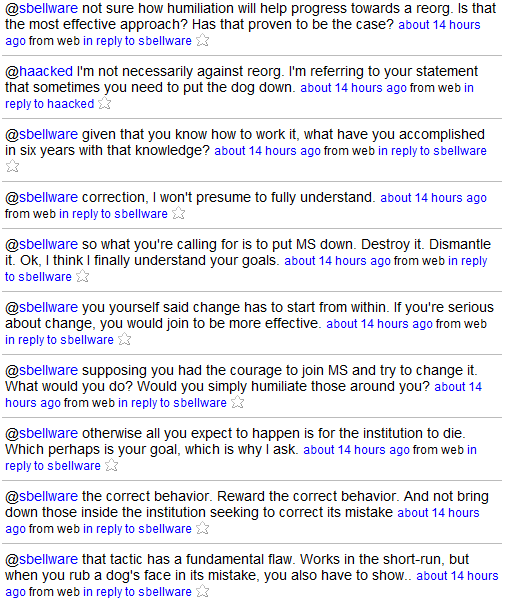

Death Threats, Intimidation, and Blogging

I miss Kathy Sierra. Kathy was the primary author of the Creating Passionate Users blog, which she started in December 2004. Her writing was of sufficient quality to propel her blog into the Technorati top 100 within a year and a half. That’s almost unheard of, particularly for a