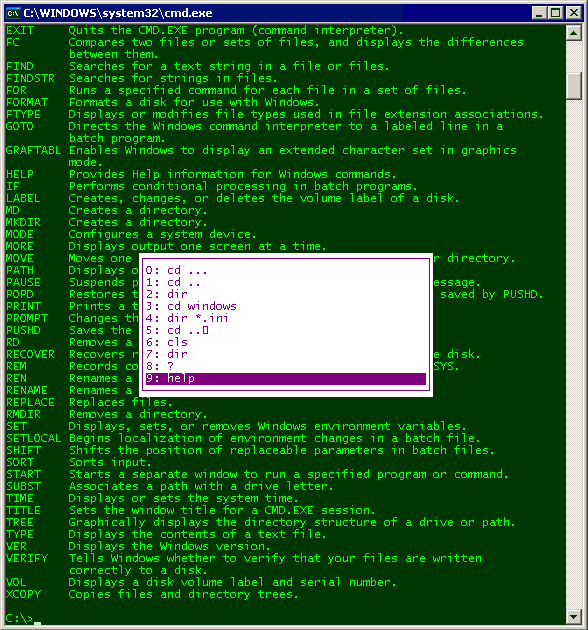

Stupid Command Prompt Tricks

Windows XP isn’t known for its powerful command-line interface. Still, one of the first things I do on any fresh Windows install is set up the “Open Command Window Here” right-click menu. And hoary old cmd.exe does have a few tricks up its sleeve that you