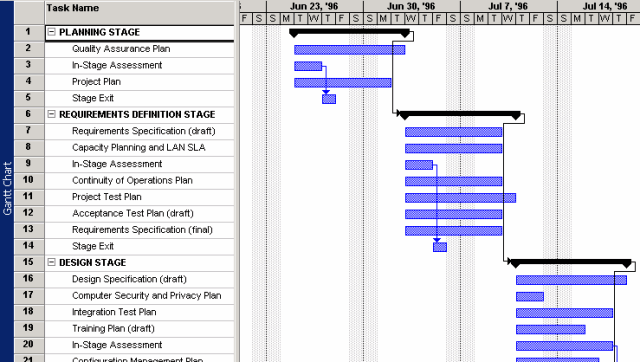

CPU vs. GPU

Intel’s latest quad-core CPU, the Core 2 Extreme QX6700, contains 582 million transistors. That’s a lot. But it pales in comparison to the 680 million transistors in nVidia’s latest video card, the 8800 GTX. Here’s a small chart of transistor counts for recent CPUs and