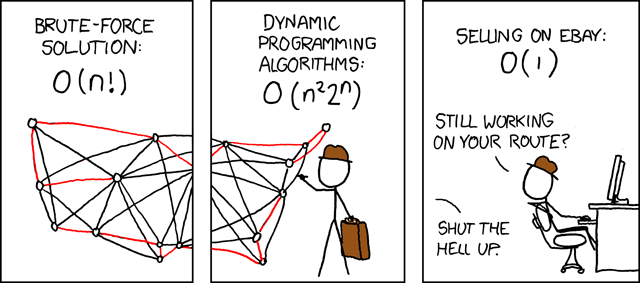

market trends

Profitable Until Deemed Illegal

I was fascinated to discover the auction hybrid site swoopo.com (previously known as telebid.com). It’s a strange combination of eBay, woot, and slot machine. Here’s how it works:

* You purchase bids in pre-packaged blocks of at least 30. Each bid costs you 75 cents, with no