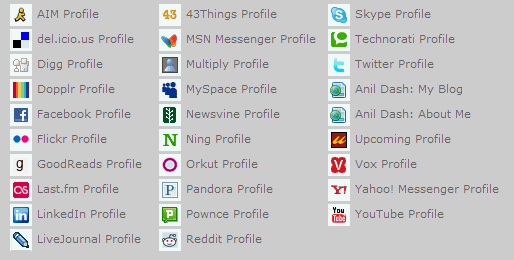

Our Fractured Online Identities

Anil Dash has been blogging since 1999. He’s been a member of the Movable Type team since its earliest days. As you’d expect from a man who has lived in the trenches for so long, his blog is excellent. It’s well worth a visit if you haven’t