The Road Not Taken is Guaranteed Minimum Income

The dream is incomplete until we share it with our fellow Americans.

The following is drawn from a speech I delivered today at Cooper Union’s Great Hall in New York City, where I joined Lieutenant Colonel Alexander Vindman to discuss the future of the American Dream:

What is the American Dream?

In 1931, at the height of the Great Depression, James Truslow Adams first defined the American Dream as

“[...] a land in which life should be better and richer and fuller for everyone, with opportunity for each according to ability or achievement. [...] not a dream of motor cars and high wages merely, but a dream of social order in which [everyone] shall be able to attain to the fullest stature of which they are innately capable, and be recognized by others for what they are, regardless of the fortuitous circumstances of birth or position”

I wanted to know what these words meant to us today. I needed to know what parts of the American Dream we all still had in common. I had to make some sense of what was happening to our country. I’ve been writing on my blog since 2004, and on November 7th, I started writing the most difficult piece I have ever written.

I asked so many Americans to tell me what the American Dream personally meant to them, and I wrote it all down.

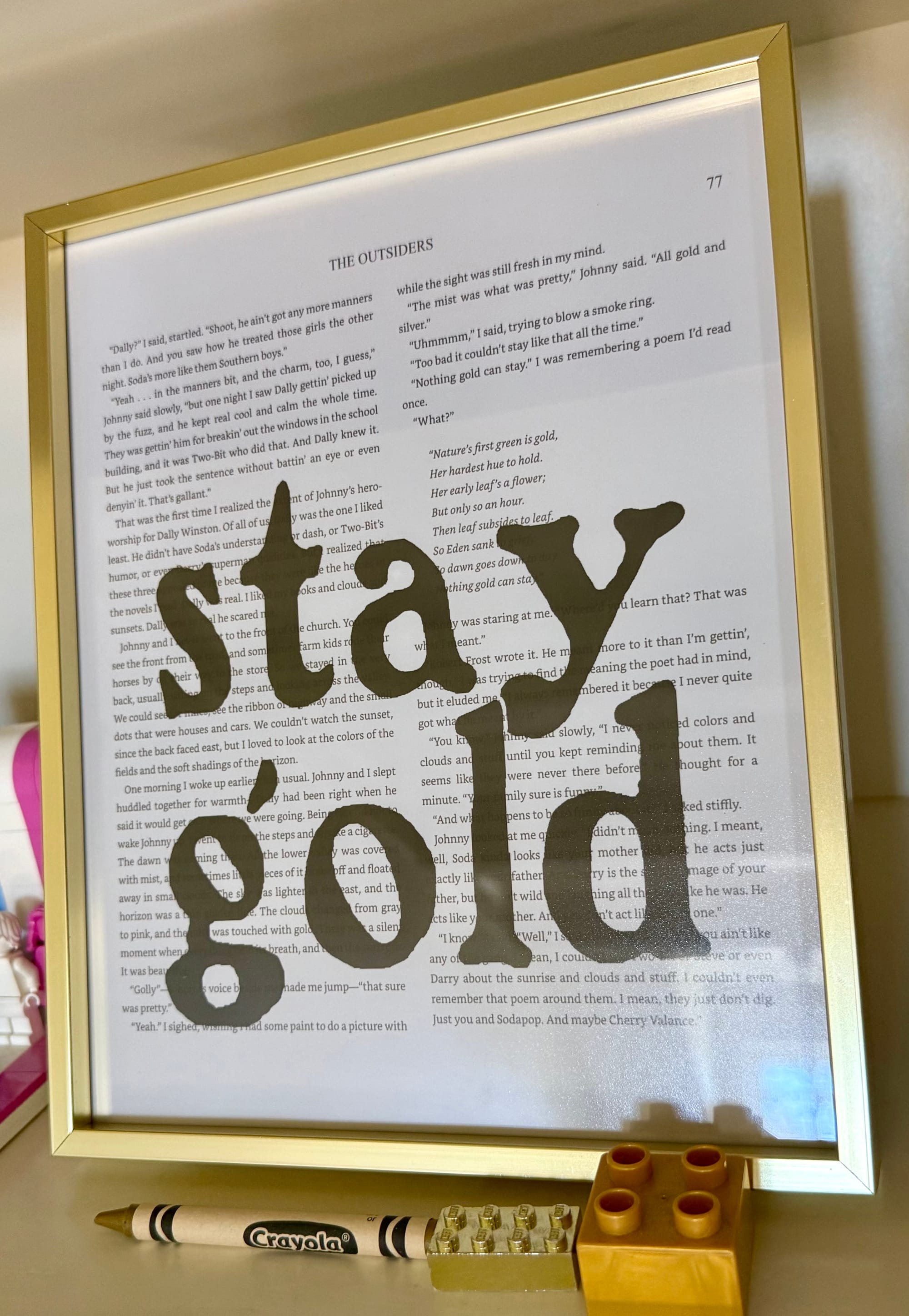

Later in November, I attended a theater performance of The Outsiders at my son’s public high school – an adaptation of the 1967 novel by S.E. Hinton. All I really knew was the famous “stay gold” line from the 1983 movie. But as I sat there in the audience among my neighbors, watching the complete story acted out in front of me by these teenagers, I slowly realized what “stay gold” meant: sharing the American Dream.

We cannot merely attain the Dream. The dream is incomplete until we share it with our fellow Americans. That act of sharing is the final realization of everything the dream stands for.

Thanks to S.E. Hinton, I finally had a name for my essay, “Stay Gold, America.” I published it on January 7th, with a Pledge to Share the American Dream.

In the first, short-term part of the Pledge, our family made eight $1 million donations to the following nonprofit groups: Team Rubicon, Children’s Hunger Fund, PEN America, The Trevor Project, NAACP Legal Defense and Educational Fund, First Generation Investors, Global Refuge, and Planned Parenthood.

Beyond that, we made many additional $1 million donations to reinforce our technical infrastructure in America – Wikipedia, The Internet Archive, The Common Crawl Foundation, Let’s Encrypt, pioneering independent internet journalism, and several other crucial open source software infrastructure projects that power much of the world today.

I encourage every American to contribute soon, however you can, to organizations you feel are effectively helping those most currently in need.

But short term fixes are not enough.

The Pledge to Share the American Dream requires a much more ambitious second act: deeper, long-term changes that will take decades. Over the next five years, my family pledges half our remaining wealth to plant a seed for foundational long-term efforts to ensure that all Americans continue to have the same fair access to the American Dream.

Let me tell you about my own path to the American Dream. It was rocky. My parents were born into deep poverty in Mercer County, West Virginia, and Beaufort County, North Carolina. We eventually clawed our way to the bottom of the middle class in Virginia.

I won’t dwell on it, but every family has their own problems. We did not remain middle class for long. But through all this, my parents got the most important thing right: they loved me openly and unconditionally. That is everything. It’s the only reason I am standing here in front of you today.

With my family’s support, I managed to achieve a solid public education in Chesterfield County, Virginia, and had the incredible privilege of an affordable state education at the University of Virginia. This is a college uniquely rooted in the beliefs of one of the most prominent Founding Fathers, Thomas Jefferson. He was a living paradox. A man of profound ideals and yet flawed – trapped in the values of his time and place.

Still, he wrote “Life, liberty, and the pursuit of happiness” near the top of the Declaration of Independence. These words were, and still are, revolutionary. They define our fundamental shared American values, although we have not always lived up to them. The American Dream isn’t about us succeeding, alone, by ourselves, but about connecting with each other and succeeding together as Americans.

I’ve been concerned about wealth concentration in America ever since I watched a 2012 video by politizane illustrating just how extreme wealth concentration already was.

I had no idea how close we already were to the wealth concentration of the American Gilded Age of the late 1800s. Historians gave this period its name in the 1920s, borrowing it from the 1873 novel by Mark Twain and Charles Dudley Warner, The Gilded Age: A Tale of Today.

During this time, labor strikes often turned violent, with the Homestead Strike of 1892 resulting in deadly confrontations between workers and Pinkerton guards hired by factory owners. Rapid industrialization created hazardous working conditions in factories, mines, and railroads, where thousands died due to insufficient safety regulations and employers who prioritized profit over worker welfare.

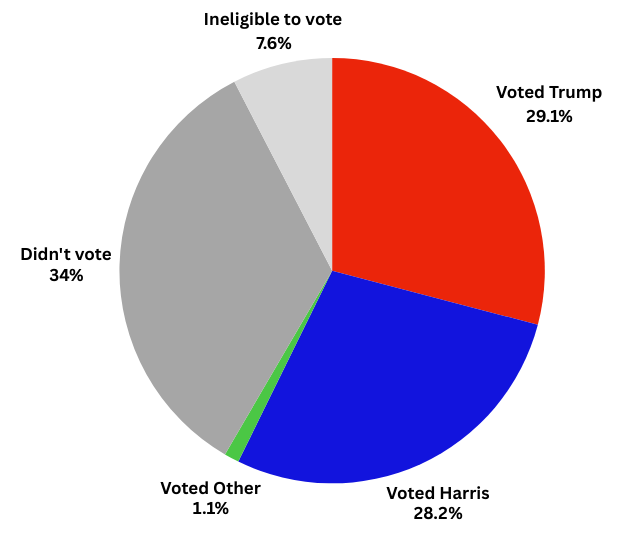

In January 2025, while I was still writing “Stay Gold, America,” we entered the period of greatest wealth concentration in all of American history. As of 2021, the top 1% of households controlled 32% of all wealth, while the bottom 50% held only 2.6%. More recent data is difficult to find, but wealth concentration has only intensified in the last four years.

We can no longer say “Gilded Age.”

We must now say “The First Gilded Age.”

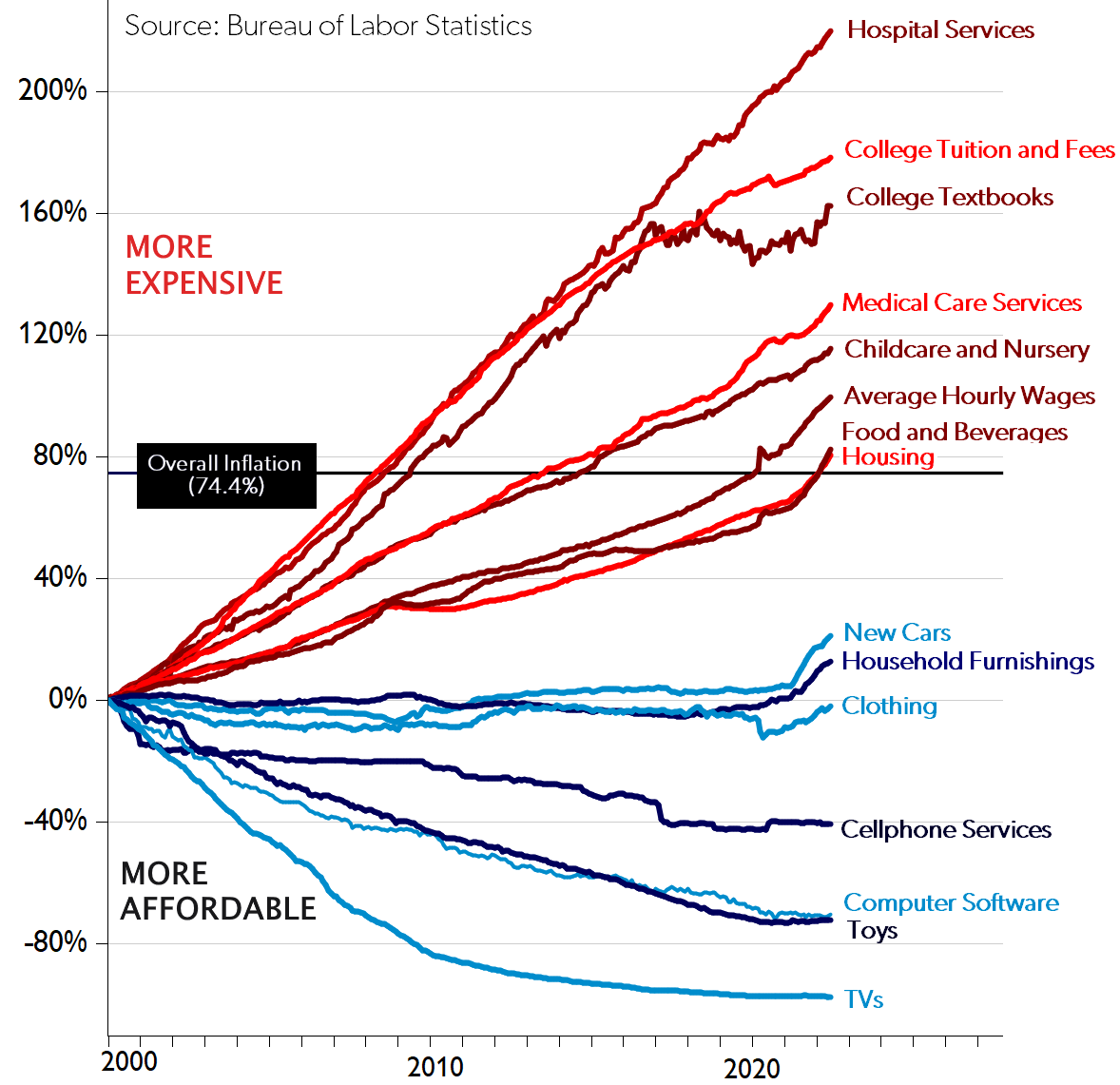

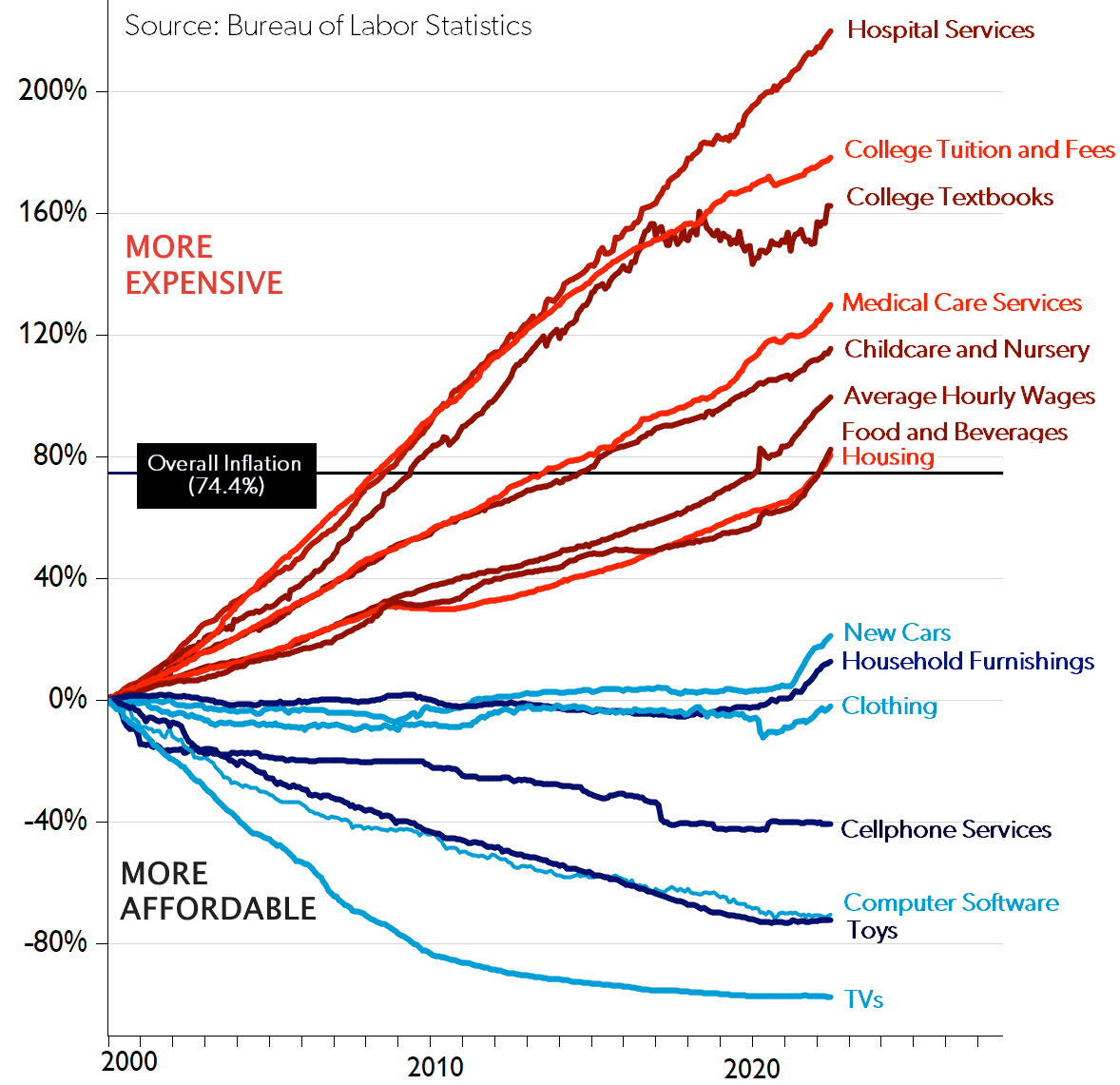

Today, in our Second Gilded Age, more and more people find their path to the American Dream blocked. When Americans face unaffordable education, inaccessible healthcare, or a lack of affordable housing, they aren’t just disadvantaged – they’re trapped, often burdened by massive debt. They have no stable foundation on which to build their lives. They watch desperately, working as hard as they can, while life simply passes them by, without even the freedom to choose their own lives.

They don’t have time to build a career. They don’t have time to learn, to improve. They don’t get to start a business. They can’t choose where their kids will grow up, or whether to have children at all, because they can’t afford to. Here in the land of opportunity, the pursuit of happiness has become an endless task for too many.

We are denying people any real chance of achieving the dream that we promised them – that we promised the entire world – when we founded this nation. It is such a profound betrayal of everything we ever dreamed about. Without a stable foundation to build a life on, our fellow Americans cannot even pursue the American Dream, much less achieve it.

I ask you this: as an American, what is the purpose of a dream left unshared with so many for so long? What’s happening to our dream? Are we really willing to let go of our values so easily? We’re Americans. We fight for our values, the values embodied in our dream, the ones we founded this country on.

Why aren’t we sharing the American Dream?

Why aren’t we giving everyone a fair chance at Life, Liberty, and the Pursuit of Happiness by providing them the fundamentals they need to get there?

The Dream worked for me, decades ago, and I deeply believe that the American Dream can still work for everyone – if we ensure every American has the same fair chance we did. The American Dream was never about a few people being extraordinarily wealthy. It’s about everyone having an equal chance to succeed and pursue their dreams – their own happiness. It belongs to them. I think we owe them at least that. I think we owe ourselves at least that.

What can we do about this? There are no easy answers. I can’t even pretend to have the answer, because there isn’t any one answer to give. Nothing worth doing is ever that simple. But I can tell you this: all the studies and all the data I’ve looked at have strongly pointed to one foundational thing we can do here in America over the next five years.

Natalie Foster, co-founder of the Economic Security Project, makes a powerful case for the idea that, with all this concentrated wealth, we can offer a Guaranteed Minimum Income in the poorest areas of this country – the areas of most need, where money goes the farthest – to unlock vast amounts of untapped American potential.

This isn’t a new idea. We’ve been doing this a while now in different forms, but we never called it Guaranteed Minimum Income.

In 1797, Thomas Paine proposed a retirement pension funded by estate taxes. It didn’t go anywhere, but it planted a seed. Much later, the economic chaos of the Great Depression, coupled with the inability of private philanthropy to provide economic security, inspired Franklin Roosevelt’s New Deal government programs. The most popular and effective program to emerge from this era was Social Security, established by the Social Security Act of 1935 to provide a guaranteed income for retirees. Before Social Security, half of seniors lived in poverty. Today only 10% of seniors live in poverty.

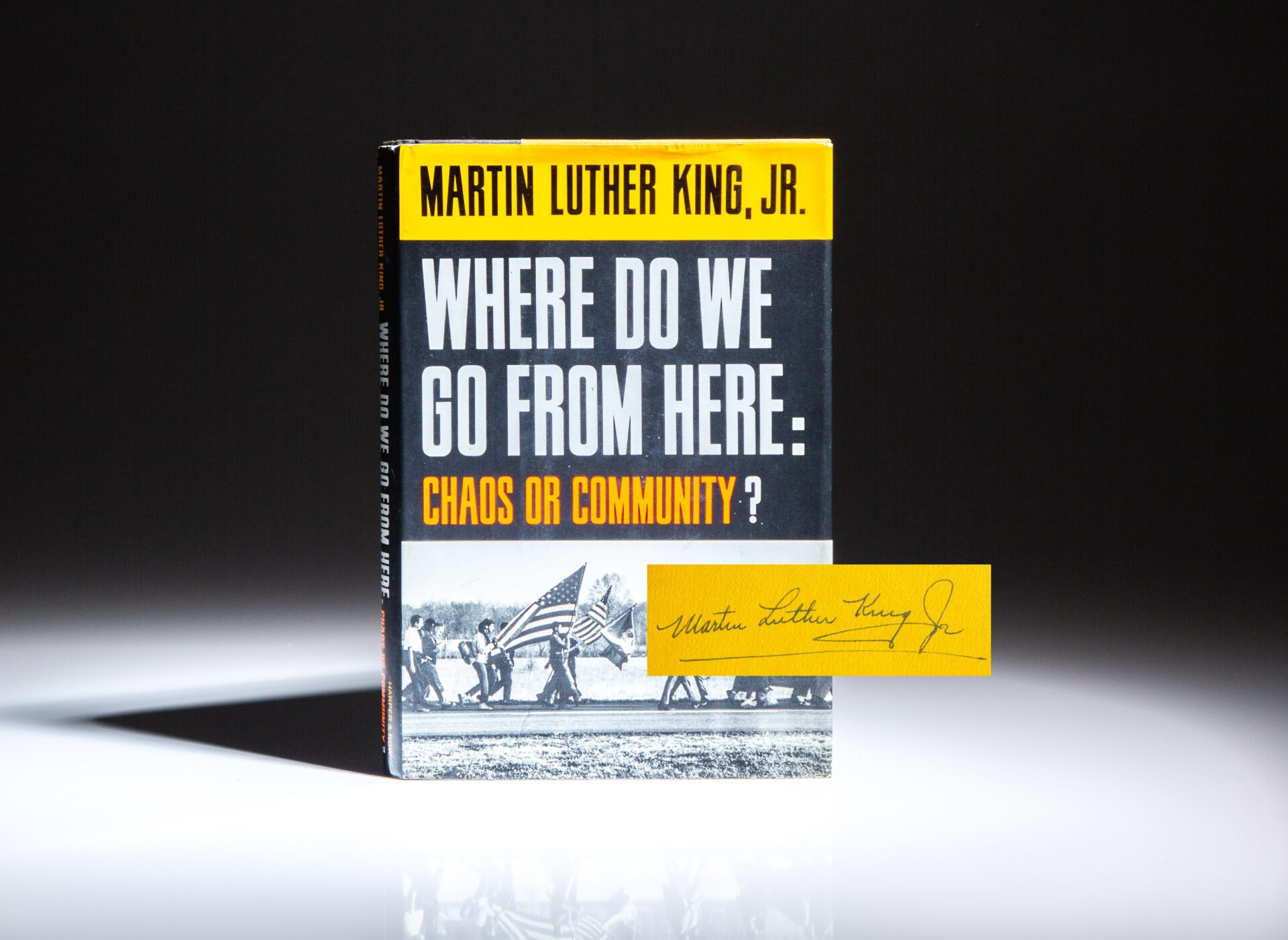

In his 1967 book Where Do We Go from Here: Chaos or Community?, Martin Luther King Jr. made the moral case for a form of UBI, Universal Basic Income. King believed that economic insecurity was at the root of all inequality. He argued that a guaranteed income, paid as direct cash disbursements, was the simplest and best way to fight poverty.

In 1972, Congress established the Supplemental Security Income (SSI) program, providing direct cash assistance to low-income elderly, blind, and disabled individuals with little or no income. This cash can be used for food, housing, and medical expenses, the essentials for financial stability. As of January 2025, more than 7.3 million people receive SSI benefits.

In 1975, Congress passed the Tax Reduction Act, establishing the Earned Income Tax Credit. This tax credit mainly benefits low-income working parents with children, encouraging work by increasing the income of low-wage workers. In 2023, it lifted about 6.4 million people out of poverty, including 3.4 million children. According to the Census Bureau, it is the second most effective anti-poverty tool after Social Security.

In 2019, directly inspired by King, Stockton, California mayor Michael Tubbs launched the $3 million Stockton Economic Empowerment Demonstration. Tubbs had been elected at age 26, making him one of the youngest mayors in American history. The program provided 125 residents with $500 per month in unconditional cash payments for two years. It found that recipients experienced improved financial stability, increased full-time employment, and enhanced well-being.

In my “Stay Gold, America” blog post, I referenced Robert Frost’s poem “Nothing Gold Can Stay” and S.E. Hinton’s famous novel The Outsiders, urging us to retain our youthful ideals as we grow older: the ideals embodied in the American Dream.

Which brings us to another Robert Frost poem, “The Road Not Taken.” Our proposal to ensure access to the American Dream is to take the road less traveled by: Guaranteed Minimum Income. GMI is a simpler, more practical, and more scalable way to directly address the root of economic insecurity with minimal bureaucracy.

We are partnering with GiveDirectly, which has overseen more GMI studies in the United States than any other organization, and OpenResearch, which in 2023 completed the largest, most detailed GMI study ever conducted in this country. Together, we are launching a new Guaranteed Minimum Income initiative in rural American communities.

Network effects within communities explain why equality of opportunity is so effective, and why a shared American Dream is the most powerful dream of all. The potential of the American Dream becomes vastly greater as more people have access to it, because they share it.

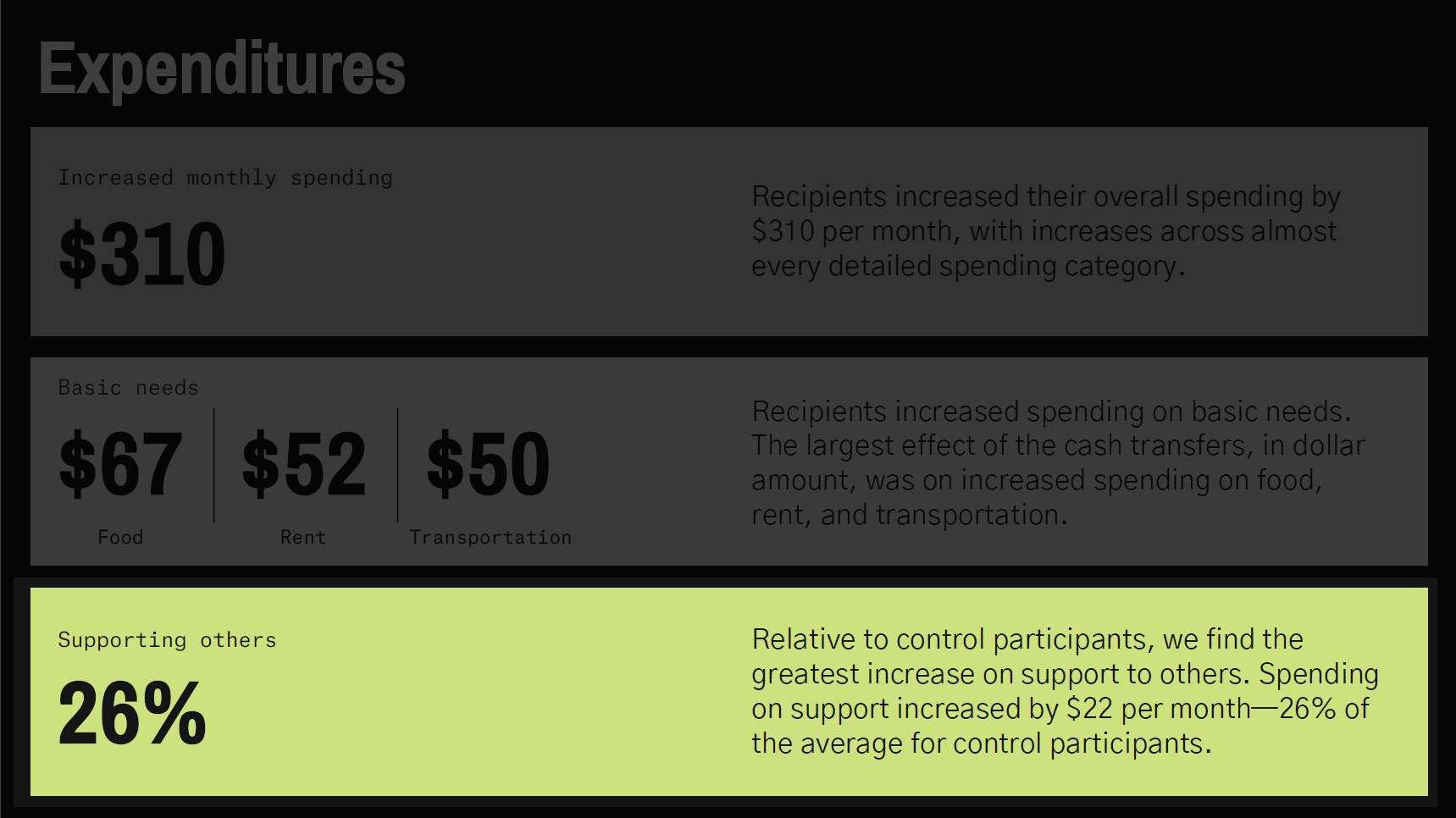

They share it with their families, their friends, and their neighbors. Data from OpenResearch’s massive, groundbreaking 2023 UBI study showed that when you give money to the poorest among us, they consistently go out of their way to share that money with others in desperate need.

The power of opportunity is not in what it can do for one person, but how it connects and strengthens bonds between people. When you empower a couple, you allow them to build a family. When you empower families, you allow them to build a community. When you guarantee fundamentals, you’re providing a foundation for those connections to grow and thrive. This is the incredible power and value of community. That is what we are investing in – each other.

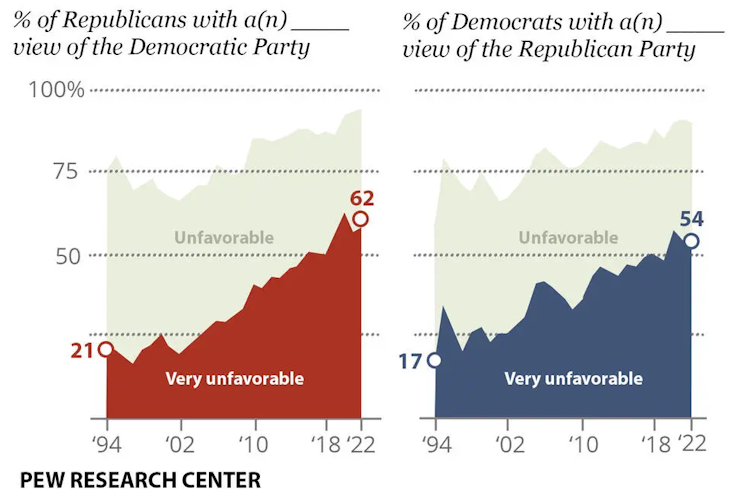

A system where there are no guarantees creates conflict. It creates inequality. A massive concentration of wealth in so few hands weakens connections between us and prevents new ones. America began as a place of connection. Millions of us came together to build this nation, not individually, but together. Equality is connection, and connection is more valuable than any product any company will ever sell you.

Why focus on rural communities? Rural counties have consistently higher poverty rates, with fewer job opportunities, lower wages, and worse access to healthcare and education. It’s not a new problem, either. Places like Appalachia, the Mississippi Delta, and American Indian reservations have been stuck in poverty for decades, and some counties, such as Oglala Lakota County, SD (55.8%) and McDowell County, WV (37.6%), have reached extreme poverty rates. Urban counties rarely see numbers that high. Data from the US Census Bureau and the USDA Economic Research Service make it clear: if you’re poor in America, being rural makes it even harder to escape.

Rural areas also offer smaller populations, which is helpful because we need to start small with lots of tightly controlled studies that we can carefully scale and improve on for larger areas. We hope to build a large body of scientific data showing that GMI really does improve the lives, and the communities, of our fellow Americans.

The initial plan is to target a few counties that I have a personal connection to and that are still mired in poverty decades later:

- My father was born in Mercer County, West Virginia, where the collapse of coal mining left good people struggling to survive. Their livelihoods and way of life are now all but gone, and good jobs are hard to find.

- My mother’s birthplace, Beaufort County, North Carolina, has been hit just as hard, with farming and factory jobs disappearing and families left wondering what’s next.

- Our third county has yet to be decided, but it will be a community facing the same systemic, generational obstacles to economic stability and to achieving the American Dream.

We will work with existing local groups to coordinate GMI studies in which community members choose to enroll. We will conduct outreach and provide mentorship to these opt-in study participants. It will be teamwork between Americans.

We hope veterans will play a crucial role in our effort. We plan to work with local communities and veteran-serving organizations to engage veterans to support and run our GMI programs: the same veterans who served our country with distinction and returned home with exceptional leadership skills and a deep commitment to their communities. Their involvement will help ensure these programs reflect core American values of self-reliance and community service to fellow Americans.

We’ll also partner with established community organizations — churches, civic groups, community colleges, local businesses. These partnerships help integrate our GMI studies with existing support systems, rather than creating new ones.

GiveDirectly and OpenResearch will build on their existing body of work, gathering extensive data from these refined studies. We’ll measure employment, entrepreneurship, education, health, and community engagement. We’ll conduct regular interviews with participants to understand their experience. How is this working for you? How can we make it better? You tell us. How can we make it better together?

Economic security isn’t only about individual well-being – it’s the bedrock of democracy. When people aren’t constantly worried about feeding themselves, feeding their family, having decent healthcare, having a place to live… we have given them room to breathe. We have given them freedom. The freedom to raise their children, the freedom to start businesses, the freedom to choose where they work, the freedom to volunteer... the freedom to vote.

This isn’t about ideology or government. It’s about us, as Americans, working together to invest in our future – possibly the greatest unlocking of human potential in our entire history. I do not say these things lightly. I’ve seen it work. I’ve looked at all the existing study data. A little bit of money is incredibly transformational for people in poverty – the people who need it the most – the people who cannot live up to their potential because they’re so busy simply trying to survive. Imagine what they could do if we gave them just a little breathing room.

GMI is a long-term investment in the future of what America should be, the way we wrote it down in the Declaration of Independence, perhaps incompletely – but our democracy was always meant to be malleable: to change, to adapt, and to improve.

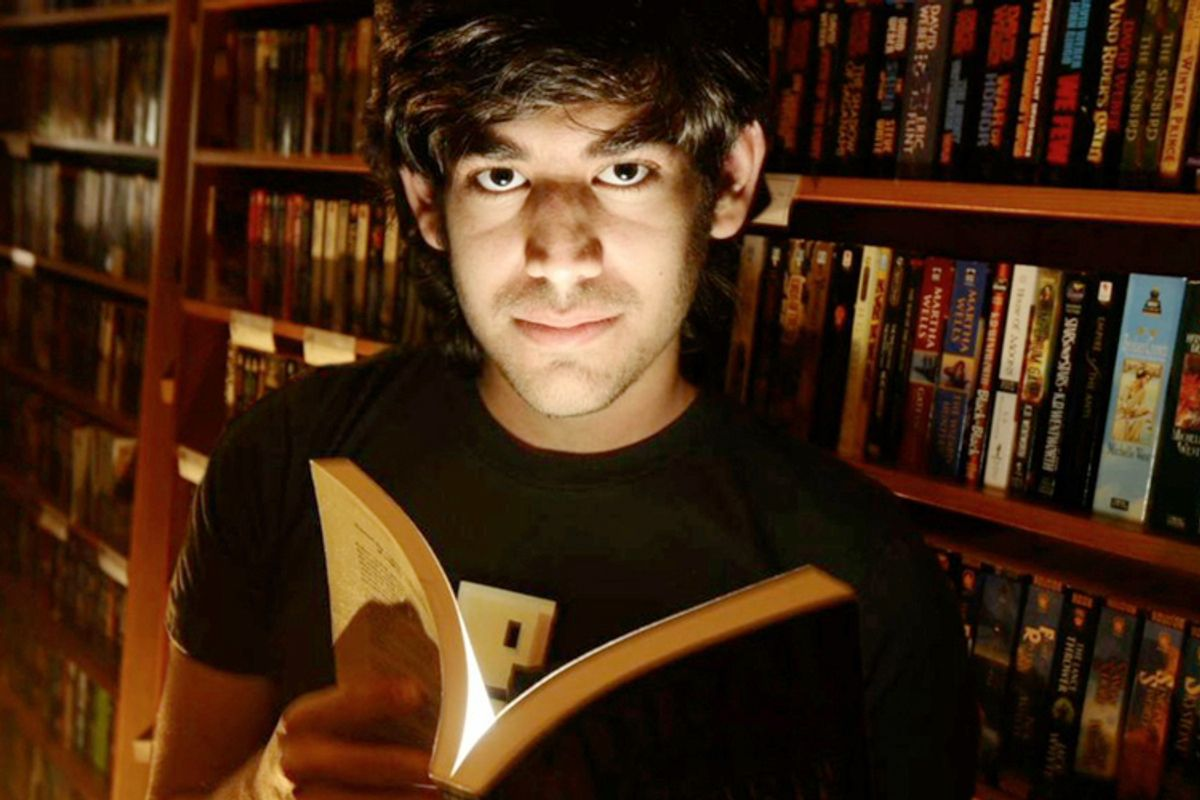

I’d like to conclude by mentioning Aaron Swartz. He was a precocious teenage programmer much like myself. Aaron helped develop RSS web feeds, co-founded Reddit, and worked with Creative Commons to create flexible copyright licenses for the common good. He used technology to make information universally accessible to everyone.

Aaron created a system to download public domain court documents from PACER, a government database that charged fees for access to what he believed should be freely available public information. A few years later, while he was a research fellow at Harvard, he took advantage of MIT’s open campus network to download millions of academic articles from JSTOR, a fee-charging online repository of academic journals, intending to make this knowledge freely accessible. Since taxpayers had funded much of this research, why shouldn’t that knowledge be freely available to everyone?

What Aaron saw as an act of academic freedom and information equality, authorities viewed as a crime. He was arrested in January 2011 and charged with multiple felonies for what many considered nothing more than accessing knowledge that should have been freely available to the public in the first place.

Despite JSTOR declining to pursue charges and MIT eventually calling for leniency, federal prosecutors aggressively pursued felony charges against Aaron carrying up to 35 years in prison. Facing overwhelming legal pressure and the prospect of being labeled a felon, Aaron took his own life at 26. His death sparked widespread criticism of prosecutorial overreach and prompted discussions about open access to information. Deservedly so. Eight days later, in this very hall, a standing-room-only memorial service honored Aaron for his commitment to the public good.

Aaron pursued what was right for we, the people. He chose to build the public good despite knowing there would be risks. He chose to be an activist. I think we should all choose to be activists, to be brave, to stand up for our defining American principles.

There are two things I ask of you today.

- Visit givedirectly.org/rural-us, where we’ll document our journey and findings from the first three rural county GMI studies. Let’s find out together how Guaranteed Minimum Income can transform American lives.

- Talk about Guaranteed Minimum Income in your communities. Meet with your state and local officials. Share the existing study data. Share outcomes. Ask them about conducting GMI studies like ours in your area. We tell ourselves stories about why some people succeed and others don’t. Challenge those stories. Economic security is not charity. It is an investment in vast untapped American potential in the poorest areas of this country.

My family is committing $50 million to this endeavor, but imagine if we had even more to share. Imagine how much more we could do if we build this together, starting today. Decades from now, people will look back and wonder why it took us so long to share our dream of a better, richer, and fuller life with our fellow Americans.

I hope you join us on this grand experiment to share our American Dream. I believe everyone deserves a fair chance at what was promised when we founded this nation: Life, Liberty, and the pursuit of The American Dream.