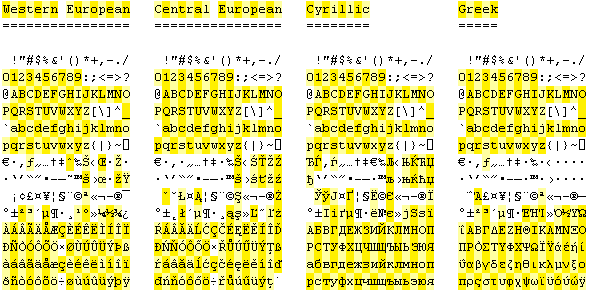

Scott Hanselman thinks signing your name with a bunch of certifications is gauche:

If it's silly to suggest putting my SATs on my resume, why is …

Scott Hanselman, MCSD, MCT, MCP, MC*.*

… reasonable? Having a cert means you have a capacity to hold lots of technical stuff in your head. Full stop. I propose we sign our names like this:

Scott Hanselman, 11 Successful Large Projects, 3 Open Source Applications, 1 Colossal Failure

Wouldn't that be nice?

I agree. Your credentials should be the sum of the projects you've worked on. But I think Scott has this backwards: you should emphasize the number of failed projects you've worked on.

How do we define "success", anyway? What were the goals? Did the project make money? Did users like the software? Is the software still in use? It's a thorny problem. I used to work in an environment where every project was judged a success. Nobody wanted to own up to the limitations, compromises, and problems in the software they ended up shipping. And the managers in charge of the projects desperately wanted to be perceived as successful. So what we got was the Special Olympics of software: every project was a winner. The users, on the other hand, were not so lucky.

Success is relative and ephemeral. But failure is a near-constant. If you really want to know if someone is competent at their profession, ask them about their failures. Last year I cited an article on predicting the success or failure of surgeons:

Charles Bosk, a sociologist at the University of Pennsylvania, once conducted a set of interviews with young doctors who had either resigned or been fired from neurosurgery-training programs, in an effort to figure out what separated the unsuccessful surgeons from their successful counterparts.

He concluded that, far more than technical skills or intelligence, what was necessary for success was the sort of attitude that Quest has – a practical-minded obsession with the possibility and the consequences of failure.

"When I interviewed the surgeons who were fired, I used to leave the interview shaking," Bosk said. "I would hear these horrible stories about what they did wrong, but the thing was that they didn't know that what they did was wrong. In my interviewing, I began to develop what I thought was an indicator of whether someone was going to be a good surgeon or not. It was a couple of simple questions: Have you ever made a mistake? And, if so, what was your worst mistake? The people who said, 'Gee, I haven't really had one,' or, 'I've had a couple of bad outcomes but they were due to things outside my control' – invariably those were the worst candidates. And the residents who said, 'I make mistakes all the time. There was this horrible thing that happened just yesterday and here's what it was.' They were the best. They had the ability to rethink everything that they'd done and imagine how they might have done it differently."

The best software developers embrace failure – in fact, they're obsessed with failure. If you forget how easy it is to make critical mistakes, you're likely to fail. And that should concern you.

Michael Hunter takes this concept one step beyond mere vigilance. He encourages us to fail early and often:

If you're lucky, however, your family encourages you to fail early and often. If you're really lucky your teachers do as well. It takes a lot of courage to fight against this, but the rewards are great. Learning doesn't happen from failure itself but rather from analyzing the failure, making a change, and then trying again. Over time this gives you a deep understanding of the problem domain (be that programming or combining colors or whatever) - you are learning. Exercising your brain is good in its own right ("That which is not exercised atrophies", my trainer likes to say), plus this knowledge improves your chances at functioning successfully in new situations.

I say the more failed projects in your portfolio, the better. If you're not failing some of the time, you're not trying hard enough. You need to overreach to find your limits and grow. But do make sure you fail in spectacular new ways on each subsequent project.
