Intel's latest quad-core CPU, the Core 2 Extreme QX6700, consists of 582 million transistors. That's a lot. But it pales in comparison to the 680 million transistors of NVIDIA's latest video card, the 8800 GTX. Here's a small chart of transistor counts for recent CPUs and GPUs:

| Chip | Type | Transistors |
| --- | --- | --- |
| AMD Athlon 64 X2 | CPU | 154 million |
| Intel Core 2 Duo | CPU | 291 million |
| Intel Pentium D 900 | CPU | 376 million |
| ATI X1950 XTX | GPU | 384 million |
| Intel Core 2 Quad | CPU | 582 million |
| NVIDIA G8800 GTX | GPU | 680 million |

ATI won't release a new video card until next year. But their current X1950 XTX isn't exactly chopped liver: 384 million transistors is more than any current dual-core CPU.

Of course, comparing GPUs to CPUs isn't an apples-to-apples comparison. The clock rates are lower, the architectures are radically different, and the problems they're trying to solve are almost completely unrelated. But GPUs now exceed the complexity of modern CPUs in terms of absolute transistor count. And like CPUs, they're becoming programmable-- it's possible to harness all that graphics power to do something other than graphics.

There's a nice overview on AnandTech which provides some background on this architectural sea change in video cards:

> So far, the only types of programs that have effectively tapped GPU power-- other than the obvious applications and games requiring 3D rendering-- have also been video related: video decoders, encoders, video effect processors, and so forth. But there are many non-video tasks that are floating-point intensive, and these programs have been unable to harness the power of the GPU.
>
> Meanwhile, the academic world has designed and utilized custom-built floating-point research hardware for years. These devices are known as stream processors. Stream processors are extremely powerful floating-point processors able to process whole blocks of data at once, whereas CPUs carry out only a handful of numerical operations at a time. We've seen CPUs implement some stream processing with instruction sets like SSE and 3DNow!, but these efforts pale in comparison to what custom hardware has been able to do.
>
> 3D rendering is also a streaming task. Modern GPUs have evolved into stream processors, sharing much in common with the customized hardware of researchers. GPU designers have cut corners where they don't need certain functionality for 3D rendering, but they have ultimately developed extremely fast and flexible stream processors. Modern GPUs are just as fast as custom hardware, but due to economies of scale are many, many times cheaper than custom hardware.
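
To make the "whole blocks of data at once" idea concrete, here's a minimal sketch of the stream programming model. It's plain Python running on the CPU, so it shows only the shape of the code, not the speed. The names `saxpy_kernel`, `run_scalar`, and `run_stream` are my own inventions for illustration; they don't come from AnandTech or any GPU toolkit.

```python
# Illustrative only: plain Python on the CPU, not GPU code.
# All function names here are hypothetical.

def saxpy_kernel(a, x, y):
    """Kernel: one small floating-point computation on one stream element."""
    return a * x + y

def run_scalar(a, xs, ys):
    """CPU-style: walk the data one element at a time, in order."""
    out = []
    for i in range(len(xs)):
        out.append(saxpy_kernel(a, xs[i], ys[i]))
    return out

def run_stream(kernel, a, xs, ys):
    """Stream-style: hand the same kernel every element of the input streams.
    No element depends on another, so hardware with many floating-point
    units would be free to process them all at once."""
    return list(map(lambda x, y: kernel(a, x, y), xs, ys))

xs = [1.0, 2.0, 3.0, 4.0]
ys = [10.0, 20.0, 30.0, 40.0]

print(run_scalar(2.0, xs, ys))                # [12.0, 24.0, 36.0, 48.0]
print(run_stream(saxpy_kernel, 2.0, xs, ys))  # [12.0, 24.0, 36.0, 48.0]
```

Both versions produce the same answer. The difference is that the stream version never says in what order the elements get processed, and that freedom is what a stream processor exploits.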

Dedicated, task-specific hardware is orders of magnitude faster than what you can achieve with a general purpose CPU. If you need proof of this, just look at the chess benchmarks. IBM's Deep Blue was capable of evaluating 200 million chess moves per second in 1997. Ten years later, the fastest quad-core desktop system can only evaluate 8 million chess moves per second. Ten-year-old custom hardware is still 25 times faster than the best general purpose CPUs. Amazing.

The most high profile application for all this GPU power at the moment is Stanford's Folding@Home. There's no shortage of exciting PR on this topic:

> The processing power of just 5,000 ATI processors is also enough to rival that of the existing 200,000 computers currently involved in the Folding@home project; and it is estimated that if a mere 10,000 computers were to each use an ATI processor to conduct folding research, that the Folding@home program would effectively perform faster than the fastest supercomputer in existence today, surpassing the 1 petaFLOP level.
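
It's worth doing the arithmetic on those PR numbers. This is a back-of-the-envelope sketch using only the figures in the quote above; the per-GPU rate it produces is what the claim implies, not something anyone measured, and the variable names are mine.

```python
# Figures taken from the quoted PR claim; everything derived is implied, not measured.
PETAFLOP = 1e15               # floating-point operations per second

gpus_for_petaflop = 10_000    # "a mere 10,000 computers" each with an ATI processor
flops_per_gpu = PETAFLOP / gpus_for_petaflop

computers = 200_000           # existing Folding@home machines
gpus_equivalent = 5_000       # ATI processors said to rival them
computers_per_gpu = computers / gpus_equivalent

print(f"{flops_per_gpu / 1e9:.0f} GFLOPS per GPU")       # 100 GFLOPS per GPU
print(f"{computers_per_gpu:.0f} computers per GPU")      # 40 computers per GPU
```

So the claim works out to roughly 100 GFLOPS per card, with each card standing in for about 40 ordinary computers.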

Stanford recently introduced a high performance folding client which runs on ATI's X1800 and X1900 series video cards. TechReport tested the new high performance folding client and came away a little disappointed:

> Over five days, our Radeon X1900 XTX crunched eight work units for a total of 2,640 points. During the same period, our single Opteron 180 core chewed its way through six smaller work units for a score of 899 -- just about one third the point production of the Radeon. However, had we been running the CPU client on both of our system's cores, the point output should have been closer to 1800, putting the Radeon ahead by less than 50%.

The GPU may be doing 20 to 40 times more work, but the scores are calibrated to a baseline system, not the absolute amount of work that's done. It's a little anticlimactic.
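
Here's the same comparison worked through, using only the point totals TechReport reported. The dual-core figure is their extrapolation (two cores, twice the points), which I'm assuming scales linearly.

```python
# Point totals from TechReport's five-day test.
gpu_points = 2640        # Radeon X1900 XTX
cpu_core_points = 899    # one Opteron 180 core

# One core versus the GPU: "just about one third".
single_core_ratio = cpu_core_points / gpu_points

# Assumption: the second core would score the same as the first.
dual_core_points = 2 * cpu_core_points

# How far ahead the GPU is once both cores are folding.
gpu_lead = gpu_points / dual_core_points - 1

print(f"One core scores {single_core_ratio:.2f} of the GPU")   # 0.34
print(f"Both cores: {dual_core_points} points")                 # 1798
print(f"GPU lead over both cores: {gpu_lead:.0%}")              # 47%
```

A 47% lead in points is a long way from the 20x to 40x difference in raw work, which is exactly the gap the scoring calibration hides.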

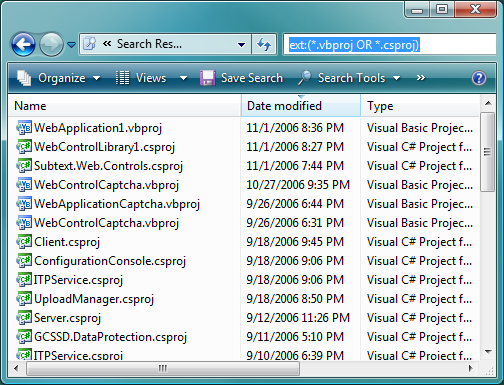

Stanford's advanced folding client exploits the Brook Language, an extension to ANSI C that allows them to compile C-like code that runs on the GPU. It leverages ATI's Stream API to communicate with the GPU. NVIDIA offers something similar to Brook in their CUDA technology:

> GPU computing with CUDA technology is an innovative combination of computing features in next generation NVIDIA GPUs that are accessed through a standard C language. Where previous generation GPUs were based on "streaming shader programs", CUDA programmers use C to create programs called threads that are similar to multi-threading programs on traditional CPUs. In contrast to multi-core CPUs, where only a few threads execute at the same time, NVIDIA GPUs featuring CUDA technology process thousands of threads simultaneously enabling a higher capacity of information flow.

Of course, CUDA only works on the latest G80 series of cards, just as ATI's Stream technology is really only useful on their latest X1900 series. All this potential programmability is a very recent development.
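
The "thousands of threads" model is easier to picture with a sketch. What follows is a CPU emulation in plain Python, not CUDA and not NVIDIA's API: every thread runs the same small function and uses its block and thread index to pick the one element it owns. The names `scale_kernel` and `launch` are hypothetical, and the loops run the "threads" one after another where a GPU would run them side by side.

```python
# CPU emulation of a thread-per-element kernel. Not real CUDA.
# `scale_kernel` and `launch` are hypothetical names for illustration.

def scale_kernel(block_idx, thread_idx, block_dim, data, out, factor):
    """Body of one thread: work out which element is mine, then process it."""
    i = block_idx * block_dim + thread_idx
    if i < len(data):          # the last block may have spare threads
        out[i] = data[i] * factor

def launch(kernel, grid_dim, block_dim, *args):
    """Run every thread of every block. A GPU would run these in parallel;
    this emulation simply loops over them."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)

data = [1.0, 2.0, 3.0, 4.0, 5.0]
out = [0.0] * len(data)

block_dim = 4                                          # threads per block
grid_dim = (len(data) + block_dim - 1) // block_dim    # blocks needed: 2

launch(scale_kernel, grid_dim, block_dim, data, out, 10.0)
print(out)   # [10.0, 20.0, 30.0, 40.0, 50.0]
```

Notice there's no loop over the data inside the kernel itself. The loop lives in the launcher, and on real hardware the launcher is the GPU.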

I expect the relationship between CPU and GPU to largely be a symbiotic one: they're good at different things. But I also expect quite a few computing problems to make the jump from CPU to GPU in the next 5 years. The potential order-of-magnitude performance improvements are just too large to ignore.
