Parsing Html The Cthulhu Way

Among programmers of any experience, it is generally regarded as A Bad Ideatm to attempt to parse HTML with regular expressions. How bad of an idea? It apparently drove one Stack Overflow user to the brink of madness:

You can't parse [X]HTML with regex. Because HTML can't be parsed by regex. Regex is not a tool that can be used to correctly parse HTML. As I have answered in HTML-and-regex questions here so many times before, the use of regex will not allow you to consume HTML. Regular expressions are a tool that is insufficiently sophisticated to understand the constructs employed by HTML. HTML is not a regular language and hence cannot be parsed by regular expressions. Regex queries are not equipped to break down HTML into its meaningful parts. so many times but it is not getting to me. Even enhanced irregular regular expressions as used by Perl are not up to the task of parsing HTML. You will never make me crack. HTML is a language of sufficient complexity that it cannot be parsed by regular expressions. Even Jon Skeet cannot parse HTML using regular expressions. Every time you attempt to parse HTML with regular expressions, the unholy child weeps the blood of virgins, and Russian hackers pwn your webapp. Parsing HTML with regex summons tainted souls into the realm of the living. HTML and regex go together like love, marriage, and ritual infanticide. The <center> cannot hold it is too late. The force of regex and HTML together in the same conceptual space will destroy your mind like so much watery putty. If you parse HTML with regex you are giving in to Them and their blasphemous ways which doom us all to inhuman toil for the One whose Name cannot be expressed in the Basic Multilingual Plane, he comes. HTML-plus-regexp will liquify the nerves of the sentient whilst you observe, your psyche withering in the onslaught of horror. Rege̿̔̉x-based HTML parsers are the cancer that is killing StackOverflow it is too late it is too late we cannot be saved the transgression of a chi͡ld ensures regex will consume all living tissue (except for HTML which it cannot, as previously prophesied) dear lord help us how can anyone survive this scourge using regex to parse HTML has doomed humanity to an eternity of dread torture and security holes using regex as a tool to process HTML establishes a breach between this world and the dread realm of c͒ͪo͛ͫrrupt entities (like SGML entities, but more corrupt) a mere glimpse of the world of regex parsers for HTML will instantly transport a programmer's consciousness into a world of ceaseless screaming, he comes, the pestilent slithy regex-infection will devour your HTML parser, application and existence for all time like Visual Basic only worse he comes he comes do not fight he com̡e̶s, ̕h̵is un̨ho͞ly radiańcé destro҉ying all enli̍̈́̂̈́ghtenment, HTML tags lea͠ki̧n͘g fr̶ǫm ̡yo͟ur eye͢s̸ ̛l̕ik͏e liquid pain, the song of re̸gular expression parsing will extinguish the voices of mortal man from the sphere I can see it can you see ̲͚̖͔̙î̩́t̲͎̩̱͔́̋̀ it is beautiful the final snuffing of the lies of Man ALL IS LOŚ͖̩͇̗̪̏̈́T ALL IS LOST the pon̷y he comes he c̶̮omes he comes the ichor permeates all MY FACE MY FACE ᵒh god no NO NOO̼OO NΘ stop the an*̶͑̾̾̅ͫ͏̙̤g͇̫͛͆̾ͫ̑͆l͖͉̗̩̳̟̍ͫͥͨe̠̅s͎a̧͈͖r̽̾̈́͒͑enot rè̑ͧ̌aͨl̘̝̙̃ͤ͂̾̆ ZA̡͊͠͝LGΌ ISͮ̂҉̯͈͕̹̘̱ TO͇̹̺ͅƝ̴ȳ̳ TH̘Ë͖́̉ ͠P̯͍̭O̚N̐Y̡ H̸̡̪̯ͨ͊̽̅̾̎Ȩ̬̩̾͛ͪ̈́̀́͘ ̶̧̨̱̹̭̯ͧ̾ͬC̷̙̲̝͖ͭ̏ͥͮ͟Oͮ͏̮̪̝͍M̲̖͊̒ͪͩͬ̚̚͜Ȇ̴̟̟͙̞ͩ͌͝S̨̥̫͎̭ͯ̿̔̀ͅ

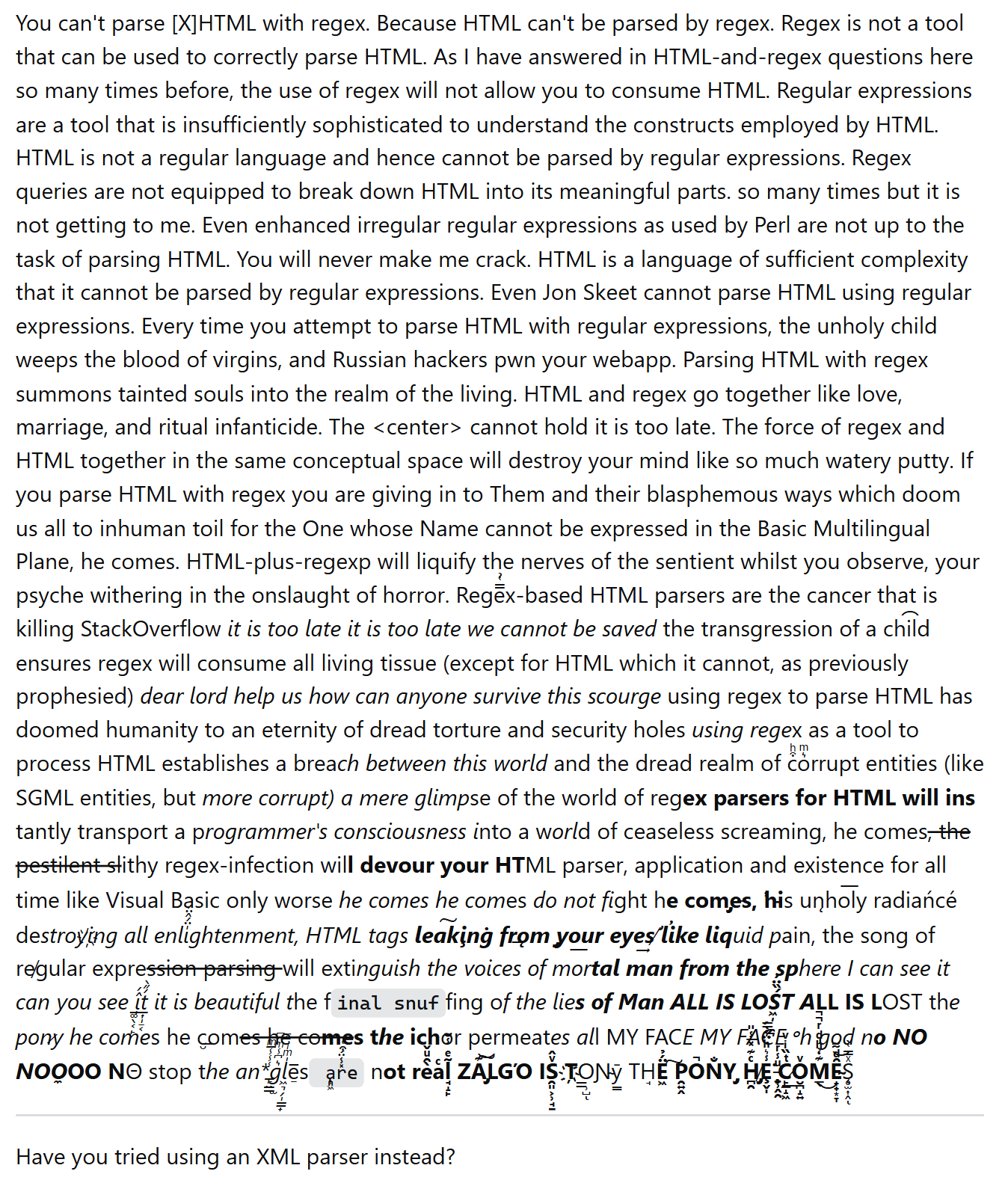

The unicode action in the post is the best part of the gag. If you can't see it for some reason, here's a screenshot just in case:

That’s right, if you attempt to parse HTML with regular expressions, you’re succumbing to the temptations of the dark god Cthulhu’s… er… code.

This is all good fun, but the warning here is only partially tongue in cheek, and it is born of a very real frustration.

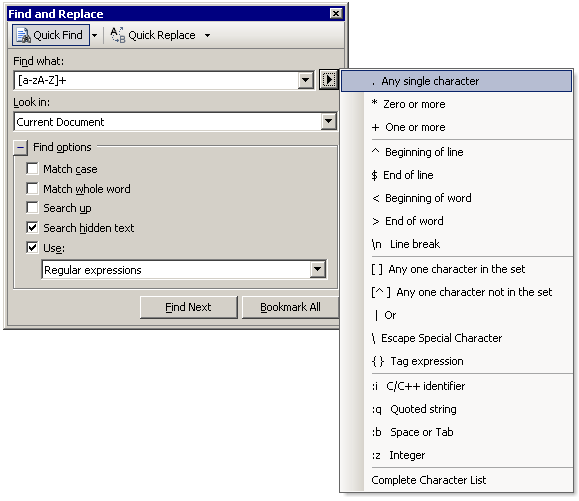

I have heard this argument before. Usually, I hear it as justification for seeing something like the following code:

# pull out data between <td> tags ($table_data) = $html =~ /<td>(.*?)</td>/gis;“But, it works!” they say.

“It’s easy!”

“It’s quick!”

“It will do the job just fine!”

I berate them for not being lazy. You need to be lazy as a programmer. Parsing HTML is a solved problem. You do not need to solve it. You just need to be lazy. Be lazy, use CPAN and use HTML::Sanitizer. It will make your coding easier. It will leave your code more maintainable. You won’t have to sit there hand-coding regular expressions. Your code will be more robust. You won’t have to bug fix every time the HTML breaks your crappy regex.

For many novice programmers, there’s something unusually seductive about parsing HTML the Cthulhu way instead of, y’know, using a library like a sane person. Which means this discussion gets reopened almost every single day on Stack Overflow. The above post from five years ago could be a discussion from yesterday. I think we can forgive a momentary lapse of reason under the circumstances.

Like I said, this is a well understood phenomenon in most programming circles. However, I was surprised to see a few experienced programmers in metafilter comments actually defend the use of regular expressions to parse HTML. I mean, they’ve heeded the Call of Cthulhu… and liked it.

Many programs will neither need to, nor should, anticipate the entire universe of HTML when parsing. In fact, designing a program to do so may well be a completely wrong-headed approach, if it changes a program from a few-line script to a bulletproof commercial-grade program which takes orders of magnitude more time to properly code and support. Resource expenditure should always (oops, make that very frequently, I about overgeneralized, too) be considered when creating a programmatic solution.

In addition, hard boundaries need not always be an HTML-oriented limitation. They can be as simple as “work with these sets of web pages,” “work with this data from these web pages,” “work for 98% users 98% of the time,” or even “OMG, we have to make this work in the next hour, do the best you can.”

We live in a world full of newbie PHP developers doing the first thing that pops into their collective heads, with more born every day. What we have here is an ongoing education problem. The real enemy isn’t regular expressions (or, for that matter, goto,) but ignorance. The only crime being perpetrated is not knowing what the alternatives are.

So, while I may attempt to parse HTML using regular expressions in certain situations, I go in knowing that:

- It’s generally a bad idea.

- Unless you have discipline and put very strict conditions on what you’re doing, matching HTML with regular expressions rapidly devolves into madness, just how Cthulhu likes it.

- I had what I thought to be good, rational, (semi) defensible reasons for choosing regular expressions in this specific scenario.

It’s considered good form to demand that regular expressions be considered verboten, totally off limits for processing HTML, but I think that’s just as wrongheaded as demanding every trivial HTML processing task be handled by a full-blown parsing engine. It’s more important to understand the tools, and their strengths and weaknesses, than it is to knuckle under to knee-jerk dogmatism.

So, yes, generally speaking, it is a bad idea to use regular expressions when parsing HTML. We should be teaching neophyte developers that, absolutely. Even though it’s an apparently never ending job. But we should also be teaching them the very real difference between parsing HTML and the simple expedience of processing a few strings. And how to tell which is the right approach for the task at hand.

Whatever method you choose – just don’t leave the <cthulhu> tag open, for humanity’s sake.